In my last post on LLMs and the future of work, I noted that “you can organize work from the front line of an organization to the c-suite according to how abstract the information” handled in each role is.

I also noted that with LLMs, it becomes easier to deal with abstract information as a whole, which means that “the benchmark for expected higher-order skills…went up across the white-collar world.”

As Evan Armstrong puts it in ‘AI Can Construct a Trading Strategy Now’, “AI is an abstraction layer over lower-order thinking,” which means “hiding away routine complexity to focus on higher-order thinking.”

This is all well and good, but what does it mean for day-to-day knowledge work?

After digging in, here are the four things I came up with.

- Agency at work will mean ‘doing’ more higher-order thinking.

- Knowledge workers who stand out will amplify their intelligence.

- All execution will mean managing multi-agent workflows.

- Learning and iterating will mean preparing data for continuous model training.

This may sound like a bunch of hogwash, but stay with me.

Agency at work will mean ‘doing’ more higher-order thinking.

Since I started actively using ChatGPT, I spend more time clarifying, explaining, and writing down the higher-order details and context for tasks and decisions than executing them.

I do this so I can use what I have written to prompt ChatGPT to do the work I want it to do: writing, generating images, analyzing information, helping me brainstorm, or giving feedback on my decisions.

What does this mean for knowledge work at scale?

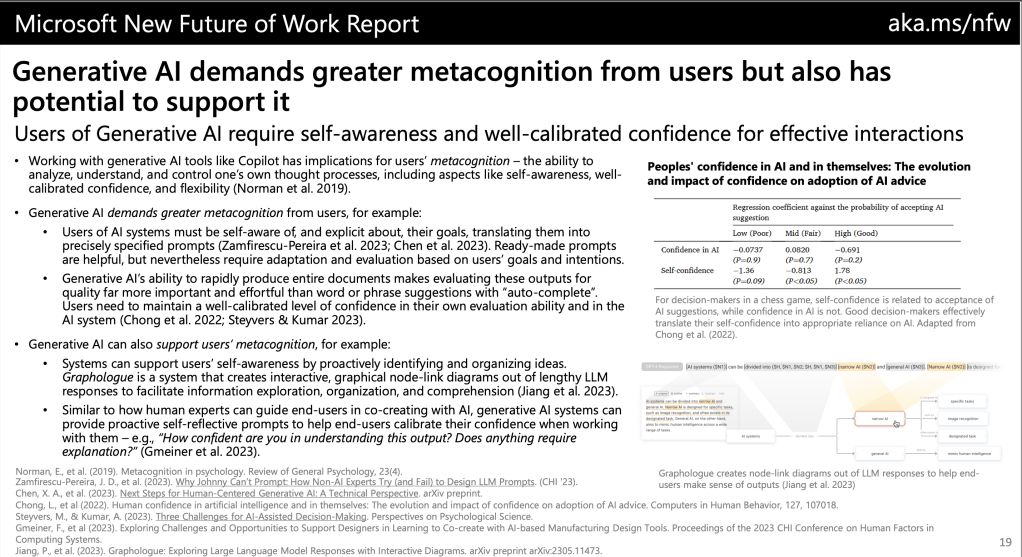

Writing good prompts comes directly from being able to clearly articulate your thoughts and intentions. The Microsoft Future of Work report puts it simply: “GenerativeAI demands greater metacognition from the user.”

In marketing or 0-to-1 projects, for example, this means clearly articulating the business goals, the project's objectives, the definition of success, and any constraints and guardrails, so that GenerativeAI can come up with an execution plan, communications, or marketing copy.
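To make this concrete, here is a minimal sketch of turning such a brief into a prompt. The `CampaignBrief` fields, the prompt wording, and the sample values are all hypothetical; the only point is that each piece of context listed above becomes an explicit, required input rather than something left implicit.

```python
from dataclasses import dataclass


@dataclass
class CampaignBrief:
    """Hypothetical container for the context a campaign prompt should carry."""
    business_goal: str
    objectives: list[str]
    success_definition: str
    constraints: list[str]  # guardrails: tone, budget, legal, brand rules, etc.
    deliverable: str        # what we want the model to produce


def build_prompt(brief: CampaignBrief) -> str:
    """Render the brief as a prompt, refusing to do so if any section is empty."""
    sections = {
        "Business goal": brief.business_goal,
        "Project objectives": "\n".join(f"- {o}" for o in brief.objectives),
        "Definition of success": brief.success_definition,
        "Constraints and guardrails": "\n".join(f"- {c}" for c in brief.constraints),
    }
    missing = [name for name, text in sections.items() if not text.strip()]
    if missing:
        raise ValueError(f"brief is missing: {', '.join(missing)}")
    context = "\n\n".join(f"{name}:\n{text}" for name, text in sections.items())
    return f"{context}\n\nUsing only the context above, draft {brief.deliverable}."


# Made-up example brief.
brief = CampaignBrief(
    business_goal="Grow trial sign-ups among small agencies",
    objectives=["Launch a four-week content campaign", "Reuse existing case studies"],
    success_definition="500 new trials at or below the current cost per trial",
    constraints=["No discount offers", "Plain, jargon-free tone"],
    deliverable="a campaign calendar with one asset per week",
)
print(build_prompt(brief))
```

Raising an error on an empty section is a deliberate choice: it forces the metacognitive work to happen before the model is ever asked for output.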

Knowledge workers who stand out will amplify their intelligence.

Let’s take the example of an AI-enabled marketing project to see what intelligence amplification looks like.

Say that, to run a content campaign in 2024, a content marketing associate starts by opening ChatGPT and prompting it to come up with a campaign calendar, a list of the comms material required, and each individual marketing asset.

To guide it, the content marketer must exercise their metacognitive skills and write a clear prompt with enough context, direction, and guardrails to get higher-quality output.

But it would be limiting if this were all marketers used AI for.

Instead, before they ever ask it to generate any campaign content, content marketers could use ChatGPT to parse through user interviews and visualise key user persona insights for the campaign.

Along with this, they could use it to help them clearly define the metrics they should track to measure the campaign's success, by prompting it to debate or brainstorm alongside them.

All of this would feed into their prompt to create the actual campaign calendar and content assets, and improve the quality of the AI generation.

Andrej Karpathy from OpenAI calls this intelligence amplification. Used this way, GenerativeAI serves as a “tool of thought,” what Andrej calls “bicycles of the mind,” and amplifies intelligence just as much as imagination.

Suddenly, that lone content marketer finds their intelligence amplified: they can focus on higher-order, more abstract objects like user personas while getting real-time feedback on how they work with them.

This is a rather brute-force illustration of intelligence amplification. It is everywhere now with copilots, from GitHub Copilot for coding to Einstein, Salesforce’s sales copilot for its platform.

Other examples include tools like Humantic and Gong, which amplify their users' intelligence by delivering the right kind of information at the right time.

All execution will mean managing multi-agent workflows.

Agents differ from ChatGPT and other intelligence amplification tools because they do not merely respond to prompts; instead, they execute tasks by combining planning, memory, and tools.

This lets them carry out more complex reasoning and perform repetitive tasks.
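A toy sketch can make “planning, memory, and tools” concrete. It calls no real model: a hard-coded `plan` function stands in for the LLM that would normally break a goal into steps, and the tool names and outputs are invented. It is not the API of AutoGPT, BabyAGI, or any other framework.

```python
from typing import Callable, Optional

# Hypothetical tools; in a real agent these would wrap APIs (analytics, search, etc.).
TOOLS: dict[str, Callable[[str], str]] = {
    "fetch_metrics": lambda topic: f"metrics for {topic}: ctr=2.1%",
    "summarize": lambda text: f"summary: {text[:60]}",
}


def plan(goal: str) -> list[tuple[str, Optional[str]]]:
    """Stand-in for the planning step, which a real agent would delegate to an LLM.

    Returns (tool, argument) steps; None means "use the latest result in memory".
    """
    return [("fetch_metrics", goal), ("summarize", None)]


def run_agent(goal: str) -> list[str]:
    memory: list[str] = []  # results so far; later steps build on them
    for tool_name, arg in plan(goal):
        tool_input = arg if arg is not None else (memory[-1] if memory else goal)
        memory.append(TOOLS[tool_name](tool_input))  # tool use
    return memory


for step in run_agent("spring campaign"):
    print(step)
```

The difference from a chat prompt is the loop: each step can read what earlier steps left in memory and act through a tool, rather than returning a single reply.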

While McKinsey has cited cognitive agents as a source of major value for implementing AI at scale since 2019, AI agents in the sense I mean here first became popular with AutoGPT and BabyAGI, and have been the center of the hype ever since.

Companies like Staf.ai and AgentOps.ai are making AI agents available directly to end users.

But I am particularly excited about multi-agent workflows such as those enabled by Microsoft’s AutoGen project.

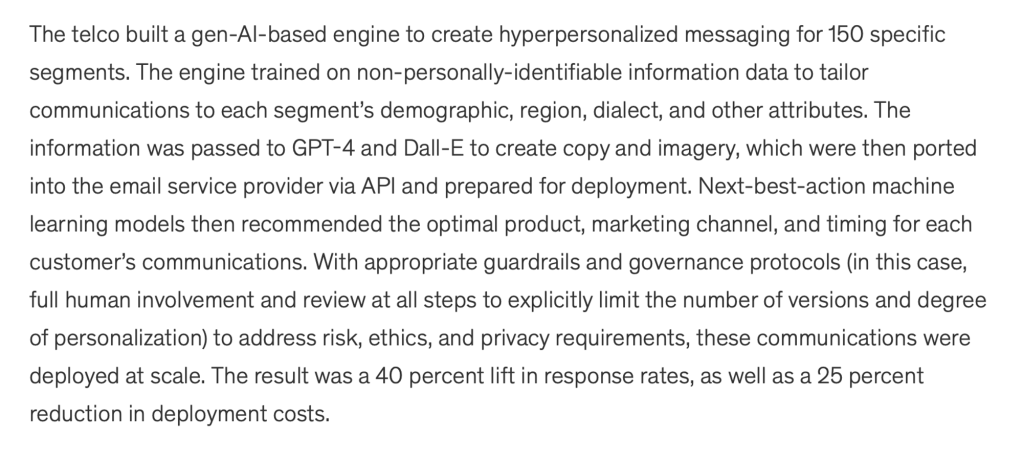

McKinsey offers a great example of what such end-to-end AI agent workflows look like: a European telecommunications company using customised GenAI workflows for its marketing outreach.

There is also a great YouTube video on building a multi-agent workflow for research. In it, the creator builds three agents (a researcher, a research manager, and a research director) using Microsoft AutoGen and OpenAI’s Assistants API to update an Airtable database of accounts.
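The video uses AutoGen and the Assistants API; the sketch below deliberately uses neither. It is a plain-Python toy with stubbed agents (no model calls) that only shows the shape of the hierarchy: a researcher produces findings, a manager reviews them, and a director decides what gets written to the accounts table, with a dict standing in for Airtable. Every function, field, and data value here is hypothetical.

```python
# Hypothetical stand-in for what a researcher agent might turn up for each account.
FAKE_SOURCES: dict[str, list[str]] = {
    "Acme": ["press release", "job posting"],
    "Globex": [],
}


def researcher(account: str) -> dict:
    """Gathers findings for one account (stubbed; a real agent would call an LLM and tools)."""
    sources = FAKE_SOURCES.get(account, [])
    return {"account": account, "sources": sources,
            "summary": f"{account}: {len(sources)} source(s) found"}


def research_manager(report: dict, min_sources: int = 1) -> dict:
    """Reviews the report and approves it only if it is backed by enough sources."""
    return {**report, "approved": len(report["sources"]) >= min_sources}


def research_director(reports: list[dict], table: dict[str, str]) -> None:
    """Decides what gets recorded: writes approved summaries to the accounts table."""
    for report in reports:
        if report["approved"]:
            table[report["account"]] = report["summary"]


def run_workflow(accounts: list[str], min_sources: int = 1) -> dict[str, str]:
    table: dict[str, str] = {}  # a plain dict standing in for the Airtable base
    reports = [research_manager(researcher(a), min_sources) for a in accounts]
    research_director(reports, table)
    return table


print(run_workflow(["Acme", "Globex"]))
```

In this framing, the human's job is the knobs around the agents, such as the `min_sources` bar the manager enforces, rather than the research itself.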

In my own job, I expect this to mean using multiple agents for tasks ranging from reading data from marketing analytics tools and synthesising actionable insights to planning and creating end-to-end campaigns, with agents acting as campaign manager or content writer. My job would then be to make sure these agents all work together productively.

I am less sure the same holds for coding and portfolio management, but you can imagine portfolio management becoming the work of managing a series of agents that ingest data from the Bloomberg Terminal and make allocation and withdrawal decisions.

And if what Chris Lattner says about the future of programming and software engineering is any guide, I am not too far off.

Another great perspective on this comes from Dan Shipper. In his post about the end of the knowledge economy, he posits that, with AI doing much of the grunt work in most knowledge jobs, everybody will be forced into the role of manager, a “model manager” in his words, and that we’ll be “allocating work to AI models and making sure the work gets done well.”

I think he paints with a broad brush when he groups the various changes happening at work under one umbrella as wide as all of us going from makers to managers.

Still, he captures something central about the coming shift: Much more of our time will be spent allocating resources.

This makes sense when you consider that much of marketing today, outside of the more intuitive work of connecting with, understanding, and knowing your customers, is already about allocating budget across channels to minimize the customer acquisition cost (CAC) of new users. You can already see this happening with tools like Tenon, which was built primarily for marketing teams.

I do not think we will all be model managers. I will not be choosing which models to use. I think much of that model complexity will be abstracted behind agents, and we will all, in effect, be agent managers.
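Here is a minimal sketch of what that allocation problem looks like, assuming each channel has diminishing returns modeled as customers = scale × √spend. The channel names and `scale` values are made up, and real response curves would have to be estimated from data. For a fixed budget, minimizing blended CAC is the same as maximizing total customers, and with concave curves like these, giving each budget increment to the channel with the largest marginal gain is a standard way to do that.

```python
import math

# Made-up channel "scale" parameters for a diminishing-returns model:
# customers acquired from a channel = scale * sqrt(spend).
CHANNELS = {"search": 40.0, "social": 30.0, "email": 15.0}


def customers(scale: float, spend: float) -> float:
    return scale * math.sqrt(spend)


def allocate(budget: float, step: float, channels: dict[str, float]) -> dict[str, float]:
    """Give each budget increment to the channel with the largest marginal gain in customers."""
    alloc = {name: 0.0 for name in channels}
    for _ in range(int(budget // step)):
        best = max(
            channels,
            key=lambda n: customers(channels[n], alloc[n] + step)
            - customers(channels[n], alloc[n]),
        )
        alloc[best] += step
    return alloc


def blended_cac(alloc: dict[str, float], channels: dict[str, float]) -> float:
    """Total spend divided by total customers acquired across all channels."""
    total_customers = sum(customers(channels[n], s) for n, s in alloc.items())
    return sum(alloc.values()) / total_customers


allocation = allocate(10_000, 100, CHANNELS)
even = {n: 10_000 / len(CHANNELS) for n in CHANNELS}
print(allocation)
print(f"greedy CAC: {blended_cac(allocation, CHANNELS):.2f}, "
      f"even-split CAC: {blended_cac(even, CHANNELS):.2f}")
```

An agent running this kind of loop continuously, with curves re-estimated from live data, is roughly the allocation work the post expects to be handed off.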

Learning and iteration will be as literal as ever.

I am most excited about this part. It concerns the process of iteration and learning in knowledge work. How do I learn at my job today?

Take the example of whether a particular piece of content performs well. To get better at content creation, I first deliberately set up a structured approach (a hypothesis about what will work) while conceptualizing the content. Then I create the content, and finally I note how it performs on predetermined metrics. It is this last step that interests me most.

Today, much of this is left to my intuition. Of course, we have been steadily introducing more parameters to make this intuitive decision-making better informed, which aligns with what I described above about intelligence amplification.

In the future, however, incorporating coach marks or insights into your decision-making will not be enough. You will need to record your learnings explicitly and in more detail to complete the feedback loop for GenAI and other AI/ML models.
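As a sketch of what “recording learnings explicitly” could mean in practice, the record below captures one pass through the hypothesis, create, and measure loop and flattens it into a labelled row that a model could learn from. The field names, the example values, and the rule that every metric is “higher is better” are all assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ContentExperiment:
    """One pass through the hypothesis -> create -> measure loop, written down explicitly."""
    content_id: str
    hypothesis: str            # what I expected to work, and why
    features: dict[str, str]   # hypothetical descriptors: format, channel, hook, ...
    targets: dict[str, float]  # predetermined metrics and their target values
    observed: dict[str, float] = field(default_factory=dict)  # filled in after publishing

    def to_training_row(self) -> dict:
        """Flatten the experiment into one labelled example for a downstream model.

        Assumes every metric is "higher is better"; the label is True only if all targets were met.
        """
        missing = sorted(set(self.targets) - set(self.observed))
        if missing:
            raise ValueError(f"metrics not yet observed: {missing}")
        met = all(self.observed[m] >= t for m, t in self.targets.items())
        return {
            "content_id": self.content_id,
            "hypothesis": self.hypothesis,
            **{f"feature_{k}": v for k, v in self.features.items()},
            **{f"observed_{k}": v for k, v in self.observed.items()},
            "label_met_targets": met,
        }


exp = ContentExperiment(
    content_id="post-042",
    hypothesis="Customer stories will out-click product explainers on LinkedIn",
    features={"format": "customer_story", "channel": "linkedin"},
    targets={"ctr": 0.02, "signups": 30},
)
exp.observed = {"ctr": 0.031, "signups": 24}
print(exp.to_training_row())
```

The hypothesis and features are written down before publishing, so the outcome is tied to what was actually tried rather than reconstructed from memory afterwards.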

What does this look like?

Real-world examples include marketers doing data labelling to build the user-modelling processes needed to bring AI and ML models into their workflows, and contributing to what Instacart calls a feature store.

Another example is IBM’s watsonx.ai, IBM’s next-generation enterprise studio for AI builders to train, validate, tune, and deploy AI models; the screenshot below shows how companies put it to use.

A third way this is coming to life is the rise of co-audit tools. Microsoft has done a lot of work here, and I am excited to go into more detail eventually.

This is another point where I diverge from Dan Shipper’s vision of the future knowledge economy.

While intelligence amplification, or abstracting away lower-order knowledge work, is mostly about allocation, clearly articulating your vision or defining the analogs of hyperparameters or features for your decisions is a different kind of work.

It requires higher-order skills such as critical thinking and discursive analysis. Building taste is different from building intuition for allocation and prioritization decisions.

Some references I still need to dig deeper into myself:

- For a detailed analysis of what metacognition looks like in the workplace, see Viktor Kewenig and Lev Tankelevitch (2023), “Think, evaluate, adapt: The metacognitive demands of generative AI.”

- For the curious, Microsoft has a great paper on co-audit tools.